In the AI age, every industry needs to operate like a foundational research lab

As the cycle time between AI research and AI product development shortens, industries that have strong in-house research capabilities will win in the long term.

Here are some companies that consistently produce some of the highest-quality AI research:

Meta

NVIDIA

Google

Apple

These companies have consistently produced high-quality research across many areas, including neural network architectures, LLM pre-training, LLM inference, tokenization, and countless others.

Each of these companies invests heavily in developing in-house research capabilities.

Why don’t other industries and companies have strong foundational research divisions?

Every industry manager, president or CEO may look at this and be skeptical.

To all the cynics and critics out there, let me justify my position by answering the following question:

Why should every industry have an exceptional research wing in the AI age?

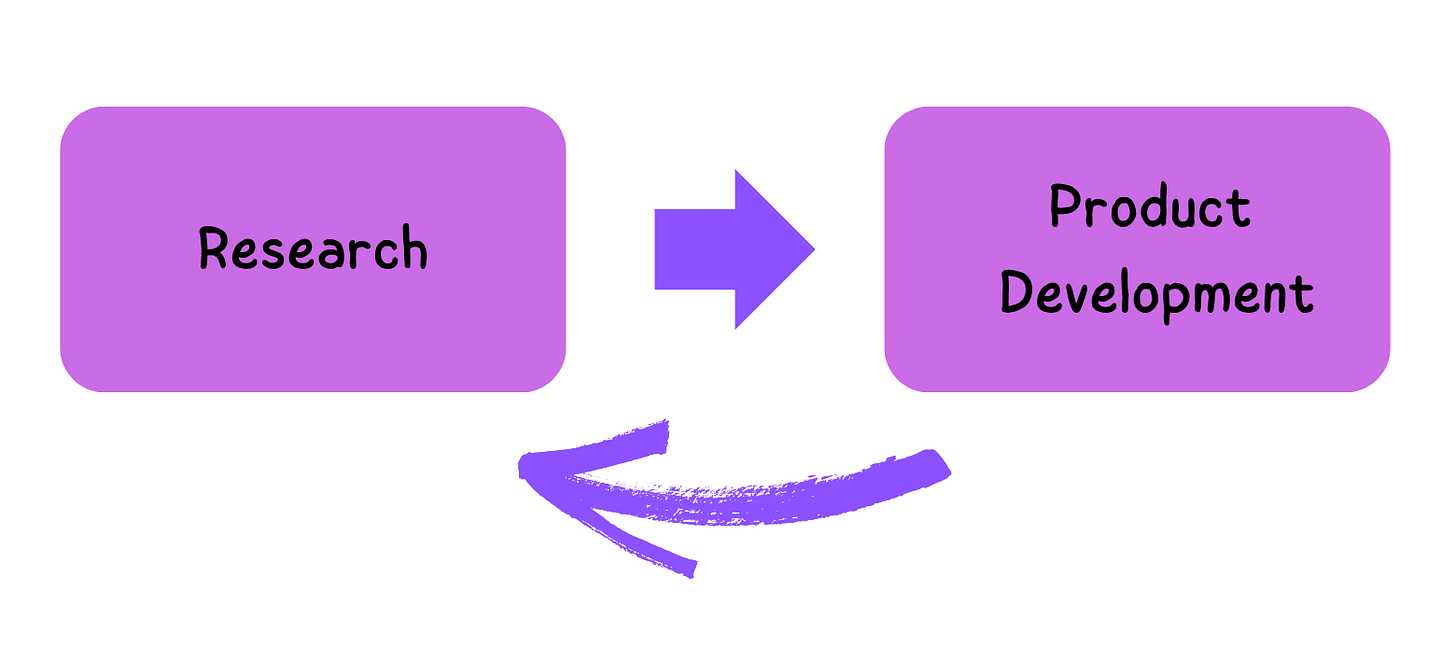

(1) The gap between AI research and AI products is shrinking

If your healthcare company's research division builds a new tokenizer that improves LLM inference efficiency by 50%, you can integrate it directly into your patient chatbot. This would immediately cut your LLM inference costs.

If your automotive company's research division builds a car assistant chatbot that speaks regional languages, you can integrate it directly into your vehicles and improve the customer experience.

If your sales division builds an LLM that can summarize customer calls and suggest targeted marketing strategies, your sales could increase significantly.

Do you see what’s happening in all of the above examples?

In each example, research flows directly into product development.

Why is this flow possible now and not before?

If your company has been operating for 50-100+ years, we’ll call it a legacy company.

Let’s consider legacy companies: long-established companies in domains such as automotive, healthcare, manufacturing, and chemicals.

Even if such a company invested in novel research on, say, a manufacturing process or a car chassis, it would take a very long time for that research to actually show up in its products.

It made little financial sense for legacy companies to invest in research that might take decades to materialize.

However, with AI, and LLMs in particular, the time it takes for research to materialize into a product is the shortest it has ever been.

There are multiple reasons for this:

Rapid pace of innovation in AI/LLMs around the world

Exponential growth in the amount of work done in this field

Rise of open-source ecosystems (open model weights, libraries, and datasets)

If the time between research and product is so short, why don’t all companies take advantage of it?

(2) Why don’t companies take advantage of this shrinking gap and set up a strong research-product flow?

The reason for this is that most companies lack strong, foundational research expertise in their organizations.

To build a new tokenizer, a regional-language car assistant chatbot, or a sales-call summarization LLM, you need strong researchers in your company: researchers who know how LLMs work from the ground up and have very strong AI/LLM knowledge.

Most companies either don’t have these researchers or don’t have a culture of foundational research.

(3) What if a company relies on external vendors?

Let’s bring back our skeptical manager, who says:

“Why can’t we just outsource this problem and rely on external vendors? It worked before, why not now?”

If your company doesn’t invest in setting up the research-product development flow, the only way to keep up with LLM advancements is to rely on external vendors.

There are multiple ways to do this:

Partner with companies that develop chatbots for you

Partner with companies that integrate AI solutions into your products

Partner with companies that build LLM solutions for your products

(4) If you outsource in the LLM age, you will lose in the long run

What are the drawbacks of the outsourcing approach?

Money: Outsourcing is expensive. Even for the simplest tasks, external vendors can charge a lot of money, because they know far more about the technology than you do. This information and skill asymmetry drives up costs.

Data security: Data security is one of the key drawbacks. Typically, if you partner with an external vendor, you will need to share your data sources with them. I know many founders and CEOs who are not at all comfortable with this.

Catch-up: You will always be playing catch-up. Your company will never be at the forefront of cutting-edge AI/LLM innovation.

Investing in a strong research-product development flow addresses all of the above drawbacks.

(5) Long-term benefits of an in-house research ecosystem

In the long term, the cost of developing an in-house research ecosystem will look small compared to the money you save in external vendor fees.

Once you have an in-house research team, all data can stay in-house.

Your company can become a research powerhouse that consistently publishes papers in the top AI journals and conferences.

Ultimately, you can turn the information and skill asymmetry in this field to your advantage and offer your products and research to other companies.

(6) What should companies do to set up this research-product development flow?

Here are some action items:

Companies should set up a strong AI research division. This vision needs to come from top management.

They should invest in upskilling their AI research division so that team members have very strong foundational knowledge and know how to perform cutting-edge research.

The research division should consistently publish papers in the top AI conferences (NeurIPS, ICML, ICLR, CVPR, etc.).

Companies should build a strong bridge between this research division and all other product divisions of the company.

Research should consistently make its way into company products. In turn, the product teams can give feedback to the research teams and suggest new problems to work on.

That’s all for now!
