The DeepSeek Cookbook: Introduction

What this book will be all about and why I decided to write it? I will make posts on this Substack regarding several book chapters.

In this post, you will learn about the following:

(1) What is DeepSeek?

(2) Why DeepSeek was a turning point in AI history?

(3) DeepSeek ingredients we will learn about and the plan for this book

1. What is DeepSeek?

DeepSeek is an AI development company based in Hangzhou, China, founded in May 2023 by Liang Wenfeng. The company specializes in open-source large language models (LLMs) and has quickly become a major force in artificial intelligence. Unlike earlier AI firms that focused on closed source models, DeepSeek’s open-source approach has allowed researchers and developers worldwide to access the latest AI technology. Over the past two years, DeepSeek has released a series of models that have significantly impacted the AI landscape.

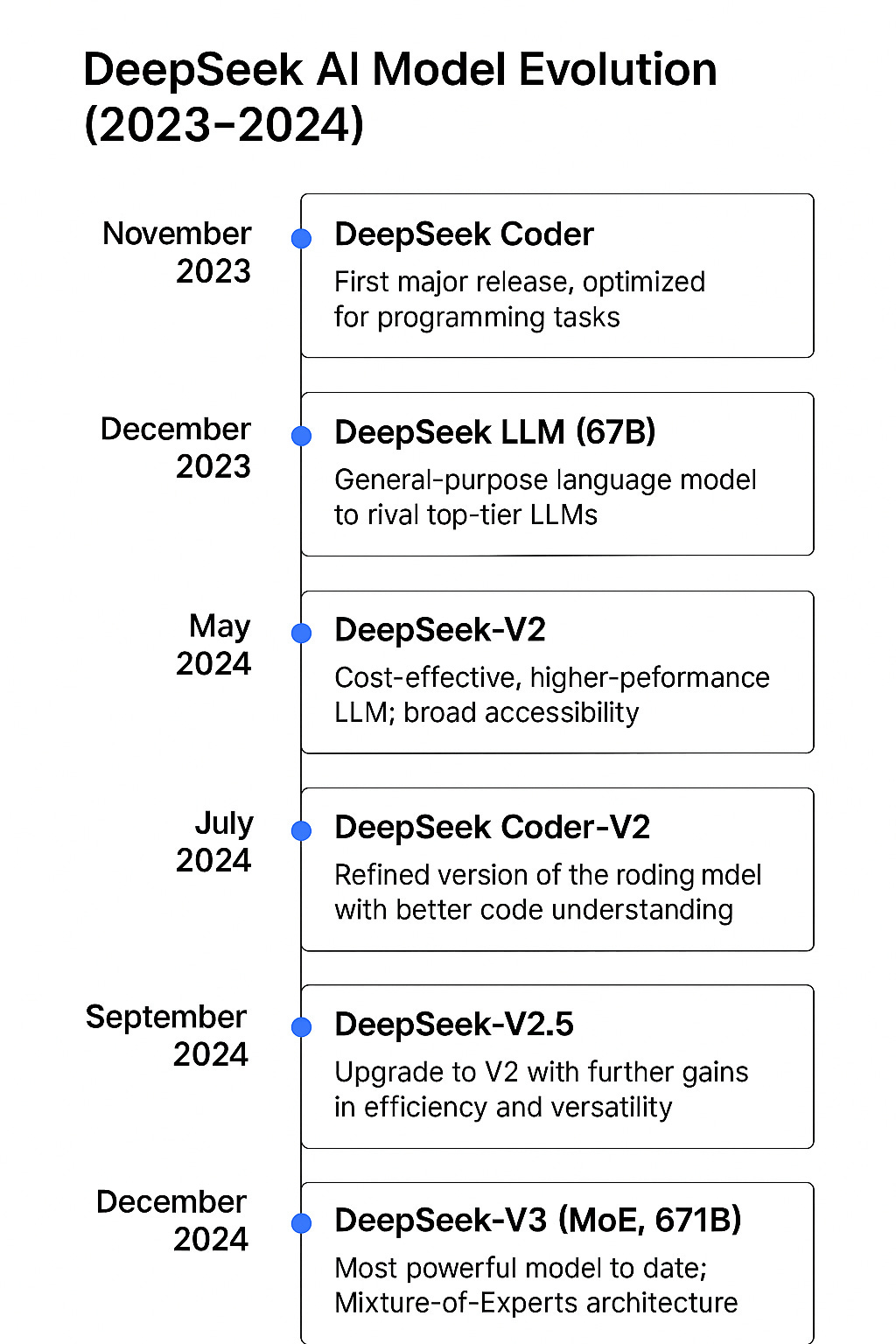

DeepSeek's first major release was DeepSeek Coder in November 2023, a model optimized for programming tasks.

The company followed this with DeepSeek LLM in December 2023, a 67-billion-parameter model designed to compete with leading AI systems.

In May 2024, DeepSeek introduced DeepSeek-V2, a cost-effective model with improved performance, making advanced AI more accessible.

Later that year, DeepSeek launched DeepSeek-Coder-V2 (July 2024) and DeepSeek-V2.5 (September 5, 2024), further refining both general and coding-related capabilities.

The company’s most significant advancement came with DeepSeek-V3 on December 26, 2024, featuring a mixture-of-experts architecture and 671 billion parameters, making it one of the most powerful AI models available.

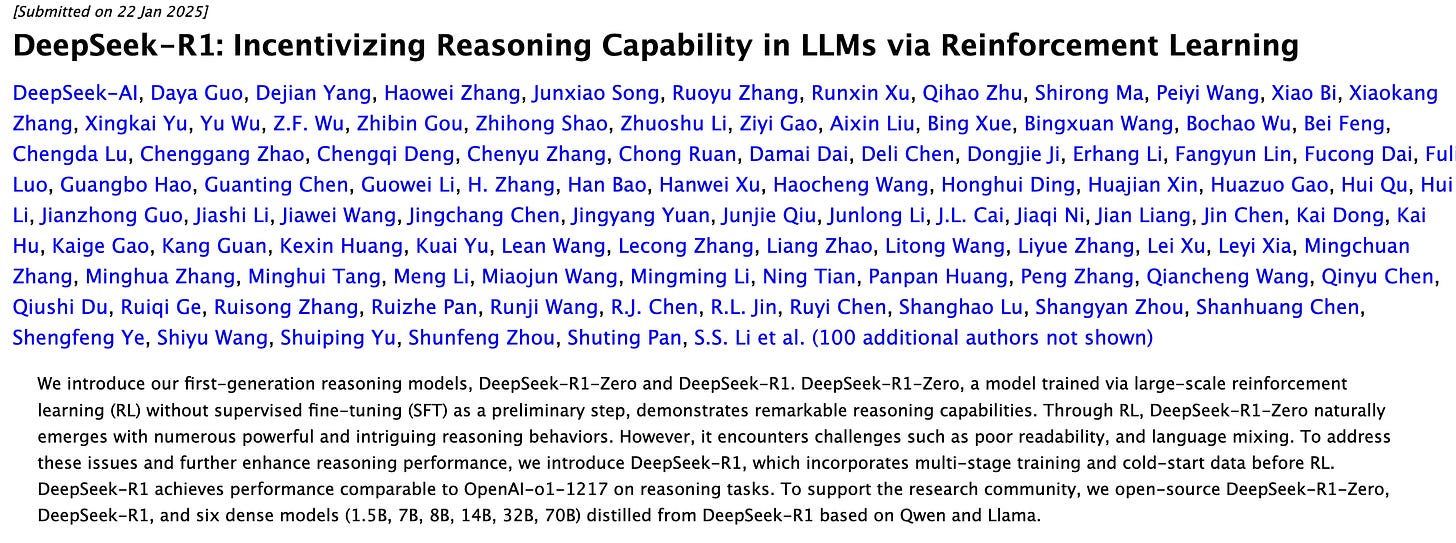

The real turning point came in early 2025 with the release of DeepSeek-R1 on January 20, 2025. This model, designed for advanced reasoning tasks, directly competed with top models from OpenAI and Google.

This led to a major shift in the AI market, with DeepSeek's open-source models outperforming many proprietary alternatives.

2. Why is DeepSeek a turning point in AI History?

DeepSeek's emergence marks a significant turning point in AI history, reshaping market dynamics and influencing global perspectives on technology.

Stock Market Reaction

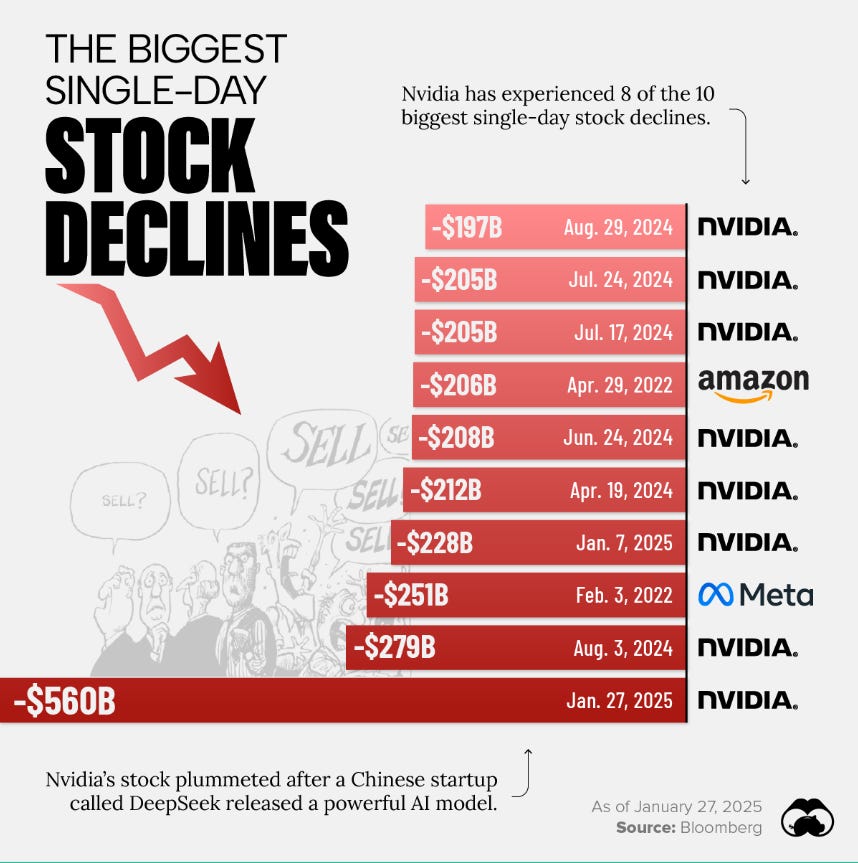

The introduction of DeepSeek's advanced AI model had immediate repercussions on the stock market, notably wiping out approximately $1 trillion from the U.S. markets.

Nvidia was particularly hard hit, experiencing a nearly 17% drop in its stock price, which translated to a loss of around $600 billion in market capitalization.

This dramatic decline was attributed to investor fears regarding the potential reduction in demand for high-end chips, as DeepSeek demonstrated that powerful AI models could be developed at a fraction of the cost and using less sophisticated hardware.

Top AI Companies' Reaction

DeepSeek's advancements have positioned it ahead of established giants like Meta and OpenAI. Notably, DeepSeek's model was reported to be 20 to 50 times cheaper to train and operate compared to OpenAI's offerings, raising concerns across the industry.

Government Response

The rise of DeepSeek has prompted governments, particularly in India, to reconsider their strategies regarding foundational AI models.

With DeepSeek showcasing that competitive AI can be developed at lower costs, there is a growing push among nations to invest in similar technologies to enhance their global standing.

3. What if we learn how to build DeepSeek from scratch?

We have established that DeepSeek models are powerful.

I had one burning question after DeepSeek became popular:

Can we learn how to build the entire DeepSeek architecture from scratch?

This took me on a long journey to understand several concepts such as multi-head latent attention, mixture of experts and multi-token prediction.

I spent a huge amount of time to understand these concepts and then coded every single concept from scratch. The more I learnt about DeepSeek, the more I was amazed of their entire architecture and how it all connects together.

I couldn’t find a single blog or book which explains the nuts and bolts of the DeepSeek architecture.

That is the main purpose of the DeepSeek cookbook which I plan to write.

I want to teach all of you to assemble building blocks of the DeepSeek architecture and code every single aspect of their architecture from scratch.

4. DeepSeek ingredients we will learn about

We will go through theory and code implementation of 5 DeepSeek pillars:

DeepSeek Pillar 1: Multi-Head Latent Attention (MLA)

This section will focus on implementing MLA, a novel attention mechanism that enhances context comprehension beyond traditional attention models.

DeepSeek Pillar 2: Advanced Mixture of Experts (MoE)

Learn how DeepSeek MoE dynamically selects expert models during inference, optimizing efficiency and scalability. We will code MoE from scratch to understand its mechanics.

DeepSeek Pillar 3: Multi-Token Prediction (MTP)

Unlike standard autoregressive models that predict one token at a time, MTP allows for predicting multiple tokens in parallel, improving speed and efficiency. We’ll dive into its implementation and benefits.

DeepSeek Pillar 4: FP-8 Quantization:

We will explore how FP-8 quantization helps reduce model size and improves computational efficiency while maintaining high accuracy.

DeepSeek Pillar 5: Reinforcement Learning

DeepSeek incorporates RL techniques to optimize response quality. We will study:

(a) Proximal Policy Optimization (PPO) – a widely used reinforcement learning algorithm for fine-tuning large models.

(b) Generalized Reinforcement Preference Optimization (GRPO) – an advanced RL method that enhances stability and performance.

Throughout this book, we will explore both the mathematical foundations and practical coding implementations of these concepts.

By the end, you will have a comprehensive understanding of what makes DeepSeek a powerful model and how to build, optimize, and fine-tune similar architectures.

5. My plan for this book

I will aim to write one comprehensive chapter on Substack every week regarding this cookbook.

This will involve powerful visuals, whiteboard demonstrations and Google Colab notebooks.

We will code everything from scratch! Nothing will be assumed.

Stay tuned!

Curious if the book has been launched and if there is a paid course in Vizuara