Welcome to Day 7 of the Vizuara AI Agents Bootcamp! Today’s session dives into Agentic RAG – an advanced approach to Retrieval-Augmented Generation – using the powerful LlamaIndex framework.

By now, you’ve learned the basics of Large Language Model (LLM) agents and frameworks. In this post, we’ll build on that foundation to explore how LlamaIndex enables agentic (agent-driven) retrieval strategies that overcome traditional RAG’s limitations. You’ll get an overview of LlamaIndex’s core components (Components, Tools, Agents, Workflows) and what makes it special, a refresher on how classical RAG works (and where it falls short), and then a step-by-step guide to implementing an Agentic RAG system with LlamaIndex.

Finally, we’ll discuss why evaluation and observability (using tools like Arize Phoenix) are crucial when taking these agents into production.

By the end of this post, you’ll understand how Agentic RAG combines the strengths of retrieval and reasoning: allowing an LLM-based agent to dynamically decide when and how to fetch more information and use various tools to best answer complex queries. Let’s jump in!

Table of Contents

LlamaIndex: Components and Unique Features

Retrieval-Augmented Generation (RAG) Explained

Limitations of Traditional RAG

What is Agentic RAG?

Implementing Agentic RAG with LlamaIndex

Step 1: Loading and Chunking Data

Step 2: Configuring LLM and Embeddings

Step 3: Creating Multiple Indexes

Step 4: Building Query Engines

Step 5: Wrapping Query Engines as Tools

Step 6: Setting Up a Router Query Engine

Step 7: Defining the Agent

Step 8: Running the Agent

Evaluation and Observability in Production

Conclusion and Next Steps

(1) LlamaIndex: Components and Unique Features

LlamaIndex (formerly known as GPT Index) is a comprehensive framework for building LLM-powered applications and agentic systems. It provides a full toolkit that abstracts many lower-level details, so you can focus on high-level logic. LlamaIndex is structured around four main building blocks:

Components: These are the fundamental pieces like data connectors, indices (vector stores, document stores), LLM interfaces, prompts, and parsers. They form the base layer of any application, handling things like document reading, chunking, embeddings, and prompt templates – essentially the “basic building blocks such as prompts, models, databases,” as our notes highlight.

Tools: In LlamaIndex, a Tool is an interface that an agent can use to perform a specific action. For example, a tool might wrap a vector database query, a web search, a calculator, or any external API. Tools expose functionalities that the agent can invoke. Having proper tool abstractions is central to building agent systems – they’re like the agent’s skills or instruments.

Agents: An Agent in this context is an autonomous reasoning entity powered by an LLM. It takes user queries and decides which tools to use (and in what sequence) to produce the best result. LlamaIndex provides both simple, pre-built agents and a way to create custom agents. Agents use the reasoning capability of LLMs to plan steps: e.g. break down a complex query, choose a tool, execute it, then integrate the result into an answer.

Workflows: Workflows are a high-level orchestration system for chaining together components, tools, and agents in structured patterns. A Workflow in LlamaIndex is an event-driven sequence of steps (with support for branching, looping, and even multiple agents working together). Workflows give you fine-grained control for complex applications – but for many use cases, you can get far with a single agent using a handful of tools.

What makes LlamaIndex special? There are a few standout features and modules that set LlamaIndex apart from other agent frameworks:

Clear Workflow System: As mentioned, LlamaIndex’s Workflow abstraction provides a structured way to build both simple and sophisticated agent pipelines. It handles coordination, state management, and event streaming under the hood, allowing you to deploy multi-step, multi-agent processes with relative ease (and without a tangle of spaghetti code).

Advanced Document Parsing with LlamaParse: LlamaIndex offers LlamaParse, a GenAI-native document parsing platform that can intelligently parse complex files (PDFs, PPTs, etc.) into clean, LLM-ready text. Good data quality is essential for RAG, and LlamaParse helps ensure your knowledge base is parsed and structured for optimal LLM consumption.

LlamaHub and Ready-to-Use Tools: LlamaHub is a community-driven library of connectors and tools for LlamaIndex. It provides over 40 pre-built tools (from database readers to web search integrations) that you can plug into your agent. This means you don’t have to write every tool from scratch – you can import tools for things like Google Drive, Slack, or search engines and have your agent use them out-of-the-box. LlamaHub greatly extends the power of your agent with minimal effort.

Plug-and-Play Components: LlamaIndex comes with many ready-made components – e.g., vector store indexes, text splitters, open-source or OpenAI LLM connectors, embedding models, and response synthesizers – all configurable. You can assemble these like Lego pieces. Additionally, LlamaIndex’s design is modular, so you can swap in your choice of LLM, vector database, or embedding model easily.

In short, LlamaIndex is a one-stop framework that goes beyond just retrieval or just prompting – it gives you the pieces to build full agent systems (with memory, tool use, and multi-step reasoning) on top of your data.

Now that we have a sense of the toolkit, let’s revisit Retrieval-Augmented Generation (RAG), since that’s at the heart of today’s topic.

(2) Retrieval-Augmented Generation (RAG) Explained

Retrieval-Augmented Generation (RAG) is a technique for enhancing LLMs with outside knowledge. Instead of relying solely on an LLM’s fixed training data, a RAG system retrieves relevant information from an external knowledge source (documents, databases, etc.) and feeds it into the LLM’s prompt to help generate a more accurate answer. This approach helps reduce hallucinations and keeps answers up-to-date, because the LLM is “augmented” with fresh or domain-specific info at query time.

A typical RAG pipeline has two main parts: a retriever and a generator. The retriever is often implemented with an embedding model + vector database: it takes the user’s query, converts it into an embedding, and performs a similarity search over a vector index of documents to find the most relevant chunks. The generator is usually an LLM that then takes the query plus the retrieved text chunks as input and produces a final answer.

How does traditional RAG work in practice? Suppose we have a collection of company documents and we want to answer questions about them. In a RAG system, we would typically:

Ingest and index the documents: Load all the documents and split them into smaller chunks (paragraphs, slides, sections, etc.) to create a knowledge base. Compute vector embeddings for each chunk and store them in a vector index (or vector database) for fast similarity search.

User asks a question: e.g., “What are the key findings of the 2025 State of AI report?”

Retrieve relevant context: The system embeds the user’s query and looks up similar vectors in the index, retrieving, say, the top k most relevant chunks (passages that likely contain the answer).

Augment the prompt and generate: The retrieved text chunks are appended to the query (along with a prompt template if needed), and this augmented prompt is passed to an LLM. The LLM then generates an answer that hopefully incorporates the information from those chunks.

Return the answer to the user. Ideally, the answer will contain factual details drawn from the documents (often with source citations if implemented), thereby being more accurate and verifiable than what the LLM alone might produce.
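Here is a minimal, dependency-free sketch of the five steps above, runnable without any external services. It is an illustration only. The bag-of-words `embed` function stands in for a real embedding model, `build_prompt` is a hypothetical prompt template, and the final LLM call is left out. The chunks are made-up placeholder text, and none of these names come from LlamaIndex.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9%]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Step 1: ingest and index (made-up example chunks paired with their vectors)
chunks = [
    "AI adoption: 78% of organizations use AI in at least one business function.",
    "Governance: companies are paying more attention to AI risk.",
    "Methodology: this placeholder chunk describes how the survey was run.",
]
index = [(chunk, embed(chunk)) for chunk in chunks]

def retrieve(query: str, k: int = 2) -> list[str]:
    """Step 3: embed the query and return the k most similar chunks."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

def build_prompt(query: str, context: list[str]) -> str:
    """Step 4: augment the query with the retrieved context (hypothetical template)."""
    context_block = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{context_block}\n\nQuestion: {query}"

# Step 2: a user question arrives
question = "What share of organizations use AI?"
prompt = build_prompt(question, retrieve(question))
print(prompt)  # a real system would now send this prompt to the LLM and return its reply
```

The point of the sketch is the data flow: retrieval runs exactly once and the LLM sees only what that single lookup returned.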

In code, a simple RAG setup with LlamaIndex looks like this:

```python
from llama_index.core import Settings, SimpleDirectoryReader, VectorStoreIndex
from llama_index.core.node_parser import SentenceSplitter
from llama_index.embeddings.openai import OpenAIEmbedding
from llama_index.llms.openai import OpenAI

# Load and chunk documents
reader = SimpleDirectoryReader(input_files=["State_of_AI_2025.pdf"])
documents = reader.load_data()
nodes = SentenceSplitter(chunk_size=1024).get_nodes_from_documents(documents)

# Configure LLM and embedding model (expects OPENAI_API_KEY in the environment)
Settings.llm = OpenAI(model="gpt-3.5-turbo")
Settings.embed_model = OpenAIEmbedding(model="text-embedding-ada-002")

# Create a vector index and a query engine over it
vector_index = VectorStoreIndex(nodes)
query_engine = vector_index.as_query_engine()
```

With this setup, we can ask questions to query_engine and it will perform the RAG steps under the hood: embed the question, retrieve relevant chunks, and query the LLM. For example:

```python
response = query_engine.query("What does the document say about AI adoption trends?")
print(response)
```

This might output an answer like: “The document notes that 78% of organizations use AI in at least one business function, and the number of functions using AI has increased compared to previous years.” The LLM’s answer is grounded in content fetched from the document, illustrating RAG in action.

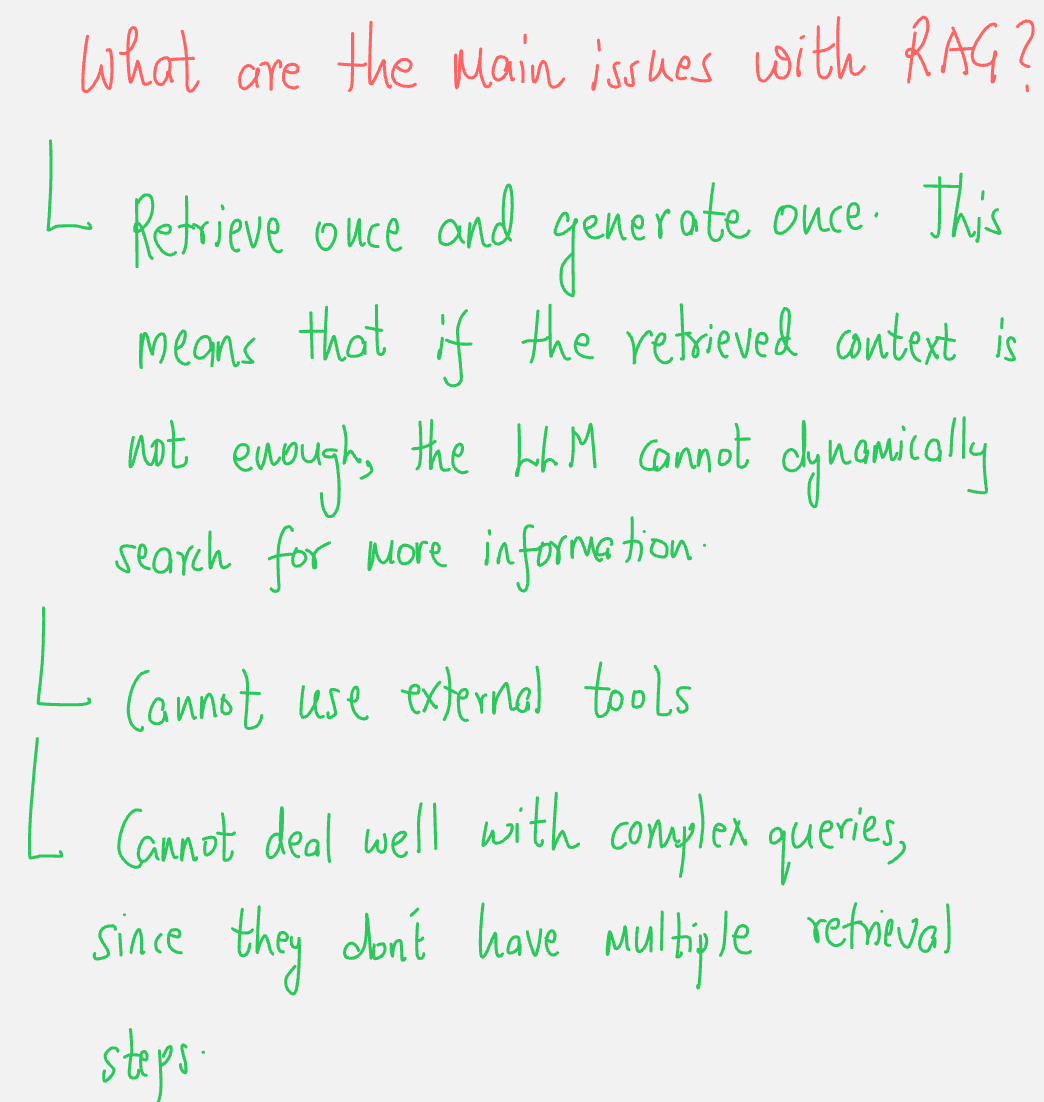

(3) Limitations of Traditional RAG

Traditional RAG has proven immensely useful, but it has some notable limitations that affect its ability to handle complex queries or tasks:

One-shot retrieval and generation: In a basic RAG pipeline, the process is retrieve once, then generate once. The LLM gets a single batch of context. If that context is insufficient or misses relevant details, the LLM won’t know to go back and retrieve more – it will simply do its best with what it has. There’s no built-in mechanism to iteratively refine the retrieval. As one source puts it, many complex tasks “cannot be solved with single-step LLM question answering” – sometimes you need multi-step reasoning and potentially multiple lookups.

Limited to one knowledge source: Naively, RAG assumes all needed information is in one vector database. But what if a question needs multiple sources (e.g. your proprietary docs and the latest web data)? Traditional RAG doesn’t natively handle using heterogeneous sources or APIs. It typically “only considers one external knowledge source,” whereas some solutions require combining multiple databases or calling external tools like web search.

No use of external tools or functions: Standard RAG is geared toward retrieval of text. It doesn’t inherently allow the LLM to use tools like calculators, translators, or other APIs. For example, if the question involves a live data lookup or a calculation, vanilla RAG can’t handle it. LLMs also struggle with certain tasks (like complex math), and without tool use, they may produce incorrect results.

No built-in reasoning or validation of results: The LLM in a basic RAG isn’t cross-checking the retrieved info or planning multiple steps – it’s essentially doing “search → read → answer” in one go. This means if the retrieved chunk is slightly off-topic or if the question actually needs a series of queries, the single-shot RAG will underperform. There’s no mechanism to reflect on the answer and say “actually, I should search again with a different strategy” or to break a complex query into sub-queries.

In summary, traditional RAG is static and flat: one round of retrieval, one round of generation, one data source.

This works for straightforward fact questions but can fall short for more complex tasks that require reasoning, multiple hops, or external knowledge beyond the text corpus.

These limitations set the stage for Agentic RAG – which injects an intelligent agent into the loop to make the process more dynamic and powerful.

(4) What is Agentic RAG?

Agentic RAG refers to a Retrieval-Augmented Generation system that leverages an AI agent to orchestrate the retrieval and reasoning process. In other words, instead of a fixed one-shot pipeline, we use an LLM (in an agent role) that can iteratively decide what to do to answer a query: it can choose which knowledge source or tool to query, whether to retrieve more information, how to combine results, and when to stop and produce an answer. The agent brings reasoning, planning, and tool-use to the RAG pipeline.

An Agentic RAG system addresses the earlier limitations in a few key ways:

Multiple knowledge sources and tools: The agent can have access to various tools, for example a vector search tool over your docs, a web search tool, and perhaps a calculator or SQL query tool. It is not limited to one database, so if a query needs external info or a different modality, the agent can route to the appropriate resource.

Iterative retrieval (multi-step): The agent doesn’t just retrieve once. It can analyze the user’s question, retrieve some context, then ask itself: “Do I have a good answer, or do I need more details?” If more info is needed, it can formulate a new query or use another tool, then retrieve additional context. This loop can repeat multiple times. Essentially, the agent can perform research (searching, reading, and refining) before giving a final answer, rather than answering in a single shot.

Reasoning and decision-making: Because the core is an LLM agent following something like a ReAct paradigm (Reason+Act), it can break complex questions into sub-tasks. For example, for a question like “Compare the AI adoption trends in the 2022 and 2025 reports and give key differences,” an agent could decide to retrieve info from the 2022 report, retrieve from the 2025 report, and then synthesize an answer. A non-agentic RAG would struggle to handle that comparison with one query.

Validation and self-monitoring: An agent can be prompted to double-check if its answer seems complete or relevant. It has a kind of feedback loop: after generating an intermediate answer or retrieving context, it can critique or analyze that result and decide on next actions. For instance, it might notice “I haven’t actually answered the second part of the question; let me search for that specifically.” This reduces the chances of the system stopping with an insufficient answer.
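The loop described above can be sketched in plain Python: pick a tool, retrieve, check whether the question is covered, and go back for more if it is not. This is a toy that rests on clearly stated assumptions. The two "tools" are hypothetical hard-coded functions returning placeholder text, and a keyword check (`missing_parts`, also hypothetical) stands in for the LLM judging whether its context is sufficient. In a real agent, the LLM itself makes these decisions and then synthesizes the final answer.

```python
from typing import Callable

# Hypothetical tools: each maps a sub-query to some retrieved (placeholder) text.
def report_2022_tool(query: str) -> str:
    return "2022 report: adoption figures for 2022 (placeholder text)."

def report_2025_tool(query: str) -> str:
    return "2025 report: adoption figures for 2025 (placeholder text)."

TOOLS: dict[str, Callable[[str], str]] = {
    "2022": report_2022_tool,
    "2025": report_2025_tool,
}

def missing_parts(question: str, context: list[str]) -> list[str]:
    """Stand-in for the LLM's self-check: which years in the question lack retrieved context?"""
    needed = [year for year in TOOLS if year in question]
    return [year for year in needed if not any(year in c for c in context)]

def agentic_retrieve(question: str, max_steps: int = 5) -> tuple[list[str], list[str]]:
    """Reason-act loop: keep calling tools until the self-check finds no gaps."""
    context: list[str] = []
    trace: list[str] = []
    for _ in range(max_steps):  # hard cap so the loop always terminates
        gaps = missing_parts(question, context)
        if not gaps:  # enough context gathered: stop retrieving
            break
        tool_name = gaps[0]  # "reason": choose the tool that fills the first gap
        trace.append(f"call {tool_name}")
        context.append(TOOLS[tool_name](question))  # "act": call it and keep the result
    return context, trace  # a real agent would now ask the LLM to synthesize an answer

context, trace = agentic_retrieve("Compare AI adoption in the 2022 and 2025 reports.")
print(trace)
```

The `max_steps` cap matters in practice too: an agent that never judges its context sufficient should still stop.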

In essence, Agentic RAG combines the strengths of RAG with the autonomy of agents.

A concise definition from Weaviate’s blog puts it well: “Agentic RAG describes an AI agent-based implementation of RAG… incorporating AI agents into the RAG pipeline to orchestrate its components and perform additional actions beyond simple retrieval and generation to overcome the limitations of the non-agentic pipeline.”

The agent becomes the “brain” that orchestrates the retrieval (and any other tools) in order to answer the user’s query effectively.

To give a concrete scenario: imagine asking an Agentic RAG system, “Based on our internal sales report and current stock prices, should we invest more in AI R&D this quarter?” A traditional RAG might only look at the internal sales report (if that’s the indexed data) and give an answer about sales. An agentic system, however, could use one tool to retrieve insights from the internal sales report, use another tool (say a stock price API) to get current stock trends, and perhaps use a calculator tool to crunch some numbers – then synthesize everything. The agent’s ability to juggle these steps is what makes Agentic RAG powerful.

Now that we conceptually understand Agentic RAG, let’s get hands-on: how do we implement an Agentic RAG system using LlamaIndex?

(5) Implementing Agentic RAG with LlamaIndex

LlamaIndex makes it fairly straightforward to build an Agentic RAG workflow. We will walk through the process step by step. In our example, we’ll use two different indexes on a single document (the 2025 State of AI report) to demonstrate how an agent can route between multiple knowledge sources. One index will be a vector index for granular retrieval of specific facts, and the other will be a summary index that can provide high-level summaries of the document. We’ll then create an agent that can decide whether to use the detailed vector search or the summary approach to answer a given question – this mimics an agent deciding between tools for different needs.

(Note: In a real application, your multiple sources could be entirely different data sets or APIs. The approach is similar: wrap each data source in a tool, give them to an agent, and let it pick.)

Step 1: Loading and Chunking Data

First, we need to load our document data and split it into chunks for indexing. For this demo, assume we have the report in a PDF or text file. LlamaIndex provides SimpleDirectoryReader for reading files and SentenceSplitter (or other Node Parsers) for chunking text into nodes.

```python
from llama_index.core import SimpleDirectoryReader
from llama_index.core.node_parser import SentenceSplitter

# Load the document
reader = SimpleDirectoryReader(input_files=["State_of_AI_2025.pdf"])
documents = reader.load_data()
print(f"Loaded {len(documents)} document(s).")

# Split document into nodes (chunks)
splitter = SentenceSplitter(chunk_size=1024)
nodes = splitter.get_nodes_from_documents(documents)
print(f"Produced {len(nodes)} text chunks.")
```

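After this, nodes is a list of text chunks (each up to about 1,024 tokens). Note that for PDFs, SimpleDirectoryReader typically produces one Document per page, so the first print may report many documents rather than one. Depending on the report’s length, the splitter will likely produce dozens of chunks, and these chunks will feed into our indexes next.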
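For intuition about what a sentence-aware splitter does, the sketch below packs whole sentences into chunks up to a size budget. It is a simplification, not LlamaIndex's actual `SentenceSplitter` algorithm. It counts words rather than tokens, splits sentences with a naive regex, and ignores overlap between chunks (which the real splitter supports via `chunk_overlap`). The names `split_sentences` and `chunk_sentences` are hypothetical.

```python
import re

def split_sentences(text: str) -> list[str]:
    """Naive sentence splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def chunk_sentences(text: str, max_words: int) -> list[str]:
    """Greedily pack whole sentences into chunks of at most max_words words.
    A single sentence longer than max_words becomes its own (oversized) chunk."""
    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for sentence in split_sentences(text):
        n = len(sentence.split())
        if current and count + n > max_words:  # adding this sentence would overflow
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:  # flush the last partial chunk
        chunks.append(" ".join(current))
    return chunks

text = "AI use is growing. Many firms now use it. Governance matters more. Risks need attention."
for chunk in chunk_sentences(text, max_words=8):
    print(chunk)
```

Keeping sentences whole is the design choice that matters here: a chunk that ends mid-sentence embeds poorly and reads poorly when handed to the LLM.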

Step 2: Configuring LLM and Embeddings

Before creating indexes, we configure which LLM and embedding model to use. LlamaIndex uses a global Settings object where we can set the default LLM and embedding model (you could also pass these in per index or query). For example, we might use OpenAI’s GPT-3.5 as the agent’s brain and OpenAI’s Ada embeddings for vector similarity:

```python
from llama_index.core import Settings
from llama_index.llms.openai import OpenAI
from llama_index.embeddings.openai import OpenAIEmbedding

Settings.llm = OpenAI(model="gpt-3.5-turbo")
Settings.embed_model = OpenAIEmbedding(model="text-embedding-ada-002")
```

This ensures that when we create indexes or query engines, they know how to embed text and what LLM to use for any generative tasks.

Step 3: Creating Multiple Indexes

Now we create two indexes over our data: one vector index and one summary index. LlamaIndex offers various index classes. Here we’ll use VectorStoreIndex for semantic search and SummaryIndex, which keeps all nodes in a simple sequential list so that queries can draw on the whole document.

```python
from llama_index.core import VectorStoreIndex, SummaryIndex

# Create a summary index (for high-level overview)
summary_index = SummaryIndex(nodes)

# Create a vector store index (for semantic search)
vector_index = VectorStoreIndex(nodes)
```

Under the hood, VectorStoreIndex embeds all nodes when it is built (this is where the embedding model is called) and stores the vectors in an in-memory vector store. SummaryIndex does no embedding at build time: it simply stores the nodes in order, and by default a query passes all of them to the LLM for synthesis, which is why it pairs well with a summarizing response mode. We won’t delve further into the internals here, but conceptually, think of summary_index as something that can answer broad questions (“What are the main themes of the report?”) by synthesizing content across the whole document, whereas vector_index is great for pinpointing specific facts (“What statistic is given for AI adoption?”).

Step 4: Building Query Engines

For each index, we create a Query Engine. A query engine is LlamaIndex’s abstraction that encapsulates how to query a given index (including how to use the LLM to synthesize an answer from that index). We can customize query engines; in our case, we might set the summary index’s engine to use a tree summarization response mode.

```python
# Create a query engine for each index
summary_query_engine = summary_index.as_query_engine(response_mode="tree_summarize")
vector_query_engine = vector_index.as_query_engine()
```

Now we have summary_query_engine and vector_query_engine – each knows how to answer questions using its respective index. If we called vector_query_engine.query("Who is Lareina Yee?"), it would retrieve the node mentioning that name and the LLM would likely respond with something like “Lareina Yee is one of the authors of the report,” pulling that from the document. If we asked summary_query_engine.query("Summarize the key findings"), it might return a paragraph summarizing major points across the whole report.
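The idea behind the `tree_summarize` response mode is a recursive reduce: summarize groups of chunks, then summarize those summaries, until a single answer remains. The sketch below shows only that control flow. `fake_summarize` is a hypothetical stand-in for an LLM call (it just joins and truncates text), and the fixed `group_size` is an assumption. As far as we know, LlamaIndex packs chunks to fit the LLM's context window instead of using a fixed count.

```python
def fake_summarize(texts: list[str], max_chars: int = 60) -> str:
    """Hypothetical stand-in for an LLM summarization call: join and truncate."""
    return " | ".join(texts)[:max_chars]

def tree_summarize(chunks: list[str], group_size: int = 2) -> str:
    """Summarize groups of chunks, then summarize the summaries, until one remains."""
    if not chunks:
        raise ValueError("need at least one chunk")
    if group_size < 2:
        raise ValueError("group_size must be at least 2, or the tree never shrinks")
    level = chunks
    while len(level) > 1:
        # Each pass replaces every group of up to group_size texts with one summary.
        level = [
            fake_summarize(level[i:i + group_size])
            for i in range(0, len(level), group_size)
        ]
    return fake_summarize(level)  # final pass over the single remaining summary

print(tree_summarize(["Adoption is rising.", "Governance is getting attention.", "More functions use AI."]))
```

Because each pass shrinks the list by roughly a factor of `group_size`, the number of levels grows only logarithmically with the number of chunks. That growth pattern is what lets a summary index cover a whole document without exceeding any single prompt's context window.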

Step 5: Wrapping Query Engines as Tools

Next, we will wrap each query engine as a Tool that an agent can use. LlamaIndex provides QueryEngineTool for this purpose. We give each tool a descriptive name and description so the agent (or router) knows what it’s for.

```python
from llama_index.core.tools import QueryEngineTool

# Create tool interfaces for each query engine
summary_tool = QueryEngineTool.from_defaults(
    query_engine=summary_query_engine,
    name="summary_tool",
    description="Useful for summarizing the State of AI 2025 report.",
)

vector_tool = QueryEngineTool.from_defaults(
    query_engine=vector_query_engine,
    name="vector_tool",
    description="Useful for retrieving specific details from the State of AI 2025 report.",
)
```

Now the agent will be able to choose between these two tools. If the question is broad or asks “Give an overview...”, the summary tool is more useful; if the question is specific (“What statistic is given about X?”), the vector tool is the right choice. We’ve essentially turned our indexes into usable skills for the agent.

Step 6: Setting Up a Router Query Engine

Sometimes, you might want an automated way to decide which tool (or index) to use for a query without fully relying on the agent’s chain-of-thought. LlamaIndex has a concept called a RouterQueryEngine that can take multiple query engines and route a question to the most appropriate one. In fact, under the hood it uses an LLM (a selector) to pick the best tool given the question. This is a form of agent in itself, but a lightweight one.

We’ll create a RouterQueryEngine that uses our two tools. This router will analyze questions and delegate either to the summary engine or the vector engine internally:

```python
from llama_index.core.query_engine.router_query_engine import RouterQueryEngine
from llama_index.core.selectors import LLMSingleSelector

# Create a router that can select between the two query engine tools
router_engine = RouterQueryEngine(
    selector=LLMSingleSelector.from_defaults(),
    query_engine_tools=[summary_tool, vector_tool],
    verbose=True,
)
```

Now router_engine is itself a query engine that, when asked a question, will use the LLM-based selector to choose one of the provided tools (with verbose=True, it prints which query engine it selected along with the selector’s reasoning). For example, if you ask “Summarize the report’s key findings,” the router will likely pick the summary tool; if you ask “How many companies reported using AI?” it should pick the vector tool. We can test it on a sample question to verify the routing logic works:
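Here is a toy router that shows what the selector contributes, with a keyword score replacing `LLMSingleSelector`'s LLM call. Everything in it is a hypothetical stand-in: `ToyTool`, `select_tool`, and the keyword sets. `RouterQueryEngine` asks an LLM to choose among the tool descriptions rather than matching keywords, but the shape is the same: describe each option, pick one, and forward the query to it.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class ToyTool:
    name: str
    description: str
    keywords: set[str]         # hypothetical: what this keyword-based selector looks for
    run: Callable[[str], str]  # stands in for a query engine

tools = [
    ToyTool("summary_tool", "Useful for summarizing the report.",
            {"summarize", "summary", "overview", "themes"},
            lambda q: f"[summary engine] {q}"),
    ToyTool("vector_tool", "Useful for retrieving specific details.",
            {"how", "many", "who", "percentage", "statistic"},
            lambda q: f"[vector engine] {q}"),
]

def select_tool(query: str, candidates: list[ToyTool]) -> ToyTool:
    """Pick the tool whose keywords overlap most with the query (ties go to the first tool)."""
    words = set(query.lower().replace("?", " ").replace(",", " ").split())
    return max(candidates, key=lambda t: len(words & t.keywords))

def route(query: str) -> str:
    """Select one tool, report the choice, and forward the query to it."""
    tool = select_tool(query, tools)
    print(f"Selecting {tool.name}")  # loosely analogous to the router's verbose output
    return tool.run(query)

print(route("How many companies reported using AI?"))
```

A keyword selector breaks easily on paraphrases, such as "Give me the gist". Handling that kind of phrasing is the reason to spend an LLM call on selection.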

```python
response = router_engine.query("Who is Lareina Yee according to the document?")
print(response)
# (It should retrieve context and answer that Lareina Yee is one of the authors.)
```

Step 7: Defining the Agent

With our tools ready, we can now define an Agent that uses them. LlamaIndex’s high-level API AgentWorkflow makes it easy to create an agent given a list of tools. We’ll wrap our router_engine itself as a single tool for the agent (so the agent sees it as one big “State of AI Report Assistant” tool, which internally can route to sub-tools). This might seem like an unnecessary indirection, but it simplifies the agent’s job to just one choice – and it showcases how one could add even more tools later (like web search, etc.) to the same agent.

```python
from llama_index.core.agent.workflow import AgentWorkflow

# Wrap the router query engine as a tool for the agent
assistant_tool = QueryEngineTool.from_defaults(
    query_engine=router_engine,
    name="state_of_ai_report_assistant",
    description="Tool to answer questions using the McKinsey 2025 State of AI report.",
)

# Initialize the agent with this tool
agent = AgentWorkflow.from_tools_or_functions(
    tools_or_functions=[assistant_tool],
    llm=Settings.llm,
    system_prompt="You are an AI assistant skilled in answering questions about the 2025 State of AI report.",
)
```

Here we provided a simple system prompt to give the agent a bit of context about its role. The agent now has one tool in its toolbox: state_of_ai_report_assistant, which it can call whenever needed. However, recall that this tool is actually our router, so indirectly the agent can do both summarization and detailed retrieval. If we had more tools (say, a web search tool from LlamaHub or a plain Python calculator function), we could include them in the list as well. In fact, a big advantage of doing Agentic RAG through LlamaIndex is how easily you can plug in additional tools from LlamaHub or your own creation as needs grow (e.g., adding an internet search tool to handle questions beyond your docs).
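Adding a tool does not have to mean writing a class. `from_tools_or_functions` also accepts ordinary Python functions and wraps them as tools, using the function's name, type hints, and docstring to describe them to the LLM. The `percentage_change` helper below is hypothetical and not part of the original example. The agent wiring appears only as a comment, because running it requires llama-index and an OpenAI API key.

```python
def percentage_change(old: float, new: float) -> float:
    """Return the percentage change from old to new, e.g. 50 -> 75 is 50.0."""
    if old == 0:
        raise ValueError("old must be non-zero")
    return (new - old) / old * 100

# Hypothetical wiring (needs llama-index and an OpenAI key, so it is not run here):
# agent = AgentWorkflow.from_tools_or_functions(
#     tools_or_functions=[assistant_tool, percentage_change],
#     llm=Settings.llm,
#     system_prompt="You are an AI assistant skilled in answering questions about the 2025 State of AI report.",
# )

print(percentage_change(50, 75))
```

Since the docstring becomes part of the tool description the LLM sees, it pays to write it as an instruction for when the tool is useful.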

Step 8: Running the Agent

Finally, it’s time to run the agent on some queries and see Agentic RAG in action. When we call agent.run(query), the agent’s LLM decides if and when to call the state_of_ai_report_assistant tool. (Because OpenAI chat models support function calling, from_tools_or_functions builds a function-calling agent here; for LLMs without function calling, it falls back to a ReAct-style agent.) Internally, that tool will route to the appropriate index. The agent will then take the tool’s output, possibly reason further, and eventually produce a final answer.

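```python
# Ask the agent a complex question
# (top-level await works in Jupyter; in a plain script, run it inside asyncio.run(...))
question = "Summarize the key trends in AI adoption and mention the percentage of companies using AI."
response = await agent.run(question)
print(response)
```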

Because this question asks for both a summary (“key trends in AI adoption”) and a specific figure (“percentage of companies using AI”), the agent may take multiple steps. For instance, it might call state_of_ai_report_assistant once for an overview of AI adoption trends (which the router sends to the summary engine), then call it again for the exact percentage of companies using AI (which the router sends to the vector engine; the report gives 78%), and finally compile the answer. The final answer might read: “The report highlights that AI adoption has grown significantly: organizations are using AI in more business functions than ever. 78% of companies reported using AI in at least one business function, up from prior years. Key trends include increased use in IT, marketing, and sales, and more focus on governance of AI risks as adoption widens.”

Behind the scenes, the agent made decisions about how to retrieve that info. This illustrates Agentic RAG: the LLM agent actively chooses what to retrieve (here, potentially retrieving twice with different objectives) before answering. If the first tool result lacks the percentage, the agent can go back with a second, more targeted tool call, something a static RAG pipeline would not do.

Implementation Note: The above code is simplified for exposition. In practice, remember that agent.run(...) is asynchronous and must be awaited: top-level await works in Jupyter notebooks, but a plain Python script needs an event loop (for example, asyncio.run). You’d also likely want to catch errors or timeouts. The verbose logs are very helpful as well: with verbose=True, the router prints which query engine it selected and why, so you can see the routing decision behind each answer. During development, that insight is gold for debugging agent behavior.
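To run the agent from a plain Python script rather than a notebook, one common pattern is to start the event loop with `asyncio.run` and bound the call with a timeout. The sketch below shows that pattern using only the standard library. `StubAgent` is a hypothetical placeholder for the `agent` built above, and the 30-second default budget is an arbitrary assumption. With the real AgentWorkflow, you would await `agent.run(question)` in the same place.

```python
import asyncio

class StubAgent:
    """Hypothetical stand-in for the AgentWorkflow built above."""

    def __init__(self, delay: float) -> None:
        self.delay = delay

    async def run(self, question: str) -> str:
        await asyncio.sleep(self.delay)  # pretend to call tools and the LLM
        return f"Answer to: {question}"

async def ask(agent, question: str, timeout_s: float = 30.0) -> str:
    """Await the agent, but give up after timeout_s seconds instead of hanging."""
    try:
        response = await asyncio.wait_for(agent.run(question), timeout=timeout_s)
        return str(response)
    except asyncio.TimeoutError:
        return "Sorry, the agent timed out."
    except Exception as exc:  # e.g. API or tool errors; log these in a real system
        return f"Agent failed: {exc}"

if __name__ == "__main__":
    # A script has no running event loop, so asyncio.run() creates one.
    print(asyncio.run(ask(StubAgent(delay=0.1), "Summarize the key trends in AI adoption.")))
```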

Now that we have an Agentic RAG system working, we should be able to tackle a wide range of queries on our document, from simple factoids to high-level analysis, with the agent dynamically using the best approach for each.

(6) Evaluation and Observability in Production

Building a clever agent is only half the battle – we also need to evaluate it and monitor it, especially if we plan to deploy it in a real-world setting or as part of a product. Agents can be unpredictable (they are generative, after all), so having tools to trace their reasoning and measure their performance is crucial. This is where observability platforms like Arize Phoenix come into play.

Arize Phoenix is an open-source LLM observability and evaluation platform. Integrated with LlamaIndex, Phoenix allows you to trace each step the agent takes and evaluate outcomes for quality, correctness, latency, etc. In other words, it helps answer questions like: What did the agent do to arrive at this answer? Which tools did it use? How long did each step take? Did it hallucinate or produce an off-topic answer anywhere? – all extremely important for debugging and improving your agent.

LlamaIndex offers a callback handler for Phoenix (available as the llama-index-callbacks-arize-phoenix package). Once set up, it can capture each event in the agent’s workflow. For example, every time the agent calls a tool, that call can be recorded as a trace span, and the final answer and any intermediate reasoning can be logged too. Phoenix then provides a user interface to visualize these traces. You might see a tree of the agent’s actions for a given query: first a tool call (with its input and output), then the next thought, then another tool call, and so on, ending with the answer. This visibility is incredibly helpful for understanding why an agent responded the way it did.
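The sketch below makes the idea of a trace span concrete. Using only the standard library, it records nested spans (name, parent, inputs, output, duration) around a toy agent run. It does not reproduce Phoenix's API or data model: `Tracer`, `span`, and the span fields are all hypothetical. In practice, Phoenix collects this information automatically once the LlamaIndex integration is enabled.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Span:
    name: str
    parent: Optional[str]
    inputs: str
    output: str = ""
    duration_s: float = 0.0

@dataclass
class Tracer:
    spans: list[Span] = field(default_factory=list)
    _stack: list[str] = field(default_factory=list)

    @contextmanager
    def span(self, name: str, inputs: str = ""):
        """Record one step: its parent step, inputs, output, and wall-clock duration."""
        record = Span(name=name, parent=self._stack[-1] if self._stack else None, inputs=inputs)
        self._stack.append(name)
        start = time.perf_counter()
        try:
            yield record  # the caller fills in record.output
        finally:
            record.duration_s = time.perf_counter() - start
            self._stack.pop()
            self.spans.append(record)  # spans are stored in completion order

tracer = Tracer()
with tracer.span("agent.run", inputs="Summarize AI adoption trends") as root:
    with tracer.span("tool:state_of_ai_report_assistant", inputs="key trends") as call:
        call.output = "(tool output)"
    root.output = "(final answer)"

for s in tracer.spans:
    print(f"{s.name:40} parent={s.parent} {s.duration_s * 1000:.2f} ms")
```

The parent links are what let a UI like Phoenix's draw the tree of agent actions described above, and the durations are what surface slow steps.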

In production, such observability isn’t just a nice-to-have; it’s essential for trust and reliability. Imagine deploying the agent to users – you want to ensure it’s giving correct answers and you want to catch and fix issues early (like an agent action that consistently times out or a prompt that yields an unwanted style of answer). Phoenix provides an evaluation dashboard for exactly this kind of oversight. You can replay problematic queries, see each step the agent took, and identify where things went wrong (e.g., it retrieved irrelevant info, or the LLM interpreted something incorrectly).

To summarize, evaluation and observability tools like Arize Phoenix play a crucial role in productionizing Agentic RAG systems. They give you insight into the “brain” of your agent, help quantify its performance, and even enable automated quality checks (with LLM-based evaluators). When building with LlamaIndex, you can integrate Phoenix by installing the callback package (with a Phoenix instance running to receive the traces) and adding one line to set the global handler: llama_index.core.set_global_handler("arize_phoenix"). From then on, every query/response can be logged and analyzed.

As the Phoenix docs note, “Phoenix makes your LLM applications observable by visualizing the underlying structure of each call…and surfacing problematic spans of execution based on latency, token count, or other metrics.” This kind of insight is invaluable for refining your agent before and after deployment.

(7) Conclusion and Next Steps

Day 7 has been all about integrating retrieval with agent reasoning – we learned how Agentic RAG allows an LLM agent to overcome the constraints of traditional RAG by using tools, multiple data sources, and iterative planning.

We explored LlamaIndex’s robust features (Components, Tools, Agents, Workflows) that make implementing such an advanced system much easier, and we walked through a concrete example of building an agent that can both summarize and retrieve specifics from a document. We also highlighted the importance of observing and evaluating our agents, because even the smartest agent benefits from a watchful eye and continuous improvement.

As we move forward, the possibilities opened up by Agentic RAG are vast. You can extend the example we built: plug in more tools from LlamaHub, connect to real-time data, or even orchestrate multiple agents working together.